Link: Linear regression

Residual sum of squares/sum of squared residuals

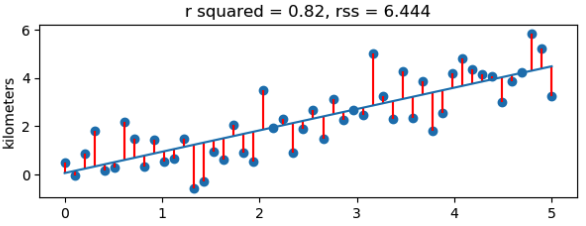

The distance from a line to a data point is called the *residual*.

*Residual sum of squares (RSS)* is the sum of the squared residuals, i.e. the squared differences between the data and the line. We square the values before summing so that positive and negative residuals do not cancel each other out.

The better the line fits the data, the smaller the RSS.
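As a minimal sketch of the idea, the snippet below computes the RSS for two candidate lines on a small made-up data set. The function name `rss`, the data, and the line form `slope * x + intercept` are assumptions for illustration only.

```python
def rss(xs, ys, slope, intercept):
    """Sum of squared residuals between the points and the line slope*x + intercept."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))


# Made-up data that lies exactly on the line y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

rss_good = rss(xs, ys, slope=2.0, intercept=0.0)  # line passes through every point
rss_bad = rss(xs, ys, slope=1.0, intercept=0.0)   # line is too flat

print(rss_good, rss_bad)
```

The line that passes through every point has an RSS of 0, while the flatter line leaves residuals of 1, 2, 3 and 4 and so gets a larger RSS.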

Notation

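In this way, var() can be viewed as the average SS: the sum of squares divided by the number of data points (or by one less than that for the sample variance).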
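A quick check of "variance as the average SS", sketched with made-up numbers. The helper name `sum_of_squares` is hypothetical, and the comparison uses the population variance from Python's standard `statistics` module (dividing by n, not n - 1).

```python
import statistics


def sum_of_squares(data):
    """Sum of squared differences between each value and the mean."""
    mean = sum(data) / len(data)
    return sum((x - mean) ** 2 for x in data)


data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # made-up values, mean is 5

ss = sum_of_squares(data)
average_ss = ss / len(data)

# The population variance should match the average SS.
print(average_ss, statistics.pvariance(data))
```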

Least squares

Least squares is the method for finding the best values of a and b in the linear regression model. The name comes from looking for the least (smallest) RSS.

- The concept is to minimize the sum of the squared distances between the observed values and the line.

- This can be achieved by taking the derivative of the RSS with respect to a and b and finding where it’s equal to 0 (in practice, done by computer).
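For a straight line, setting those derivatives to 0 can be solved by hand, which gives a closed-form answer. The sketch below assumes the model is written as y = a·x + b with a as the slope and b as the intercept (the notes do not spell out which is which), and uses made-up data; `fit_line` is a hypothetical helper name.

```python
def fit_line(xs, ys):
    """Least-squares slope and intercept for y = a*x + b.

    Comes from setting dRSS/da = 0 and dRSS/db = 0 and solving for a and b.
    """
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    a = sxy / sxx             # slope
    b = y_mean - a * x_mean   # intercept
    return a, b


# Made-up data lying exactly on y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

a, b = fit_line(xs, ys)
print(a, b)
```

Because the data sits exactly on a line, the fit recovers that line and the resulting RSS is 0. With noisy data the same formulas return the line with the smallest RSS.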

Note that there’s another similar method called Weighted least squares, which we can use in Fitting a curve to data.